100 changed files with 13143 additions and 566 deletions

+ 34

- 7

Dockerfile

| # The first instruction is what image we want to base our container on | # The first instruction is what image we want to base our container on | ||||

| # We Use an official Python runtime as a parent image | # We Use an official Python runtime as a parent image | ||||

| FROM python:3.6 | |||||

| #FROM python:3.6 | |||||

| # FROM directive instructing base image to build upon | |||||

| FROM python:3.6.6 | |||||

| # The enviroment variable ensures that the python output is set straight | # The enviroment variable ensures that the python output is set straight | ||||

| # to the terminal with out buffering it first | # to the terminal with out buffering it first | ||||

| ENV PYTHONUNBUFFERED 1 | |||||

| #ENV PYTHONUNBUFFERED 1 | |||||

| # App directory | |||||

| RUN mkdir -p /usr/src/app | |||||

| WORKDIR /usr/src/app | |||||

| # Install azure event hub client dependencies | |||||

| #COPY p /usr/src/app/ | |||||

| # Bundle app source | |||||

| COPY . /usr/src/app | |||||

| # COPY startup script into known file location in container | |||||

| COPY start.sh /start.sh | |||||

| # create root directory for our project in the container | # create root directory for our project in the container | ||||

| RUN mkdir /esther_kleinhenz_ba | |||||

| #RUN mkdir /esther_kleinhenz_ba | |||||

| # EXPOSE port 8000 to allow communication to/from server | |||||

| #EXPOSE 8000 | |||||

| RUN set -x \ | |||||

| && buildDeps='curl gcc libc6-dev libsqlite3-dev libssl-dev make xz-utils zlib1g-dev' | |||||

| # Install any needed packages specified in requirements.txt | |||||

| RUN pip install -r requirements.txt | |||||

| # Set the working directory to /esther_kleinhenz_ba | # Set the working directory to /esther_kleinhenz_ba | ||||

| WORKDIR /esther_kleinhenz_ba | |||||

| #WORKDIR /esther_kleinhenz_ba | |||||

| # CMD specifcies the command to execute to start the server running. | |||||

| CMD ["/start.sh"] | |||||

| # done! | |||||

| # Copy the current directory contents into the container at /esther_kleinhenz_ba | # Copy the current directory contents into the container at /esther_kleinhenz_ba | ||||

| ADD . /esther_kleinhenz_ba/ | |||||

| #ADD . /esther_kleinhenz_ba/ | |||||

| # Install any needed packages specified in requirements.txt | |||||

| RUN pip install -r requirements.txt |

+ 2

- 2

application/forms.py

| fields = ('title', 'text', 'published_date','tags') | fields = ('title', 'text', 'published_date','tags') | ||||

| class NewTagForm(forms.ModelForm): | class NewTagForm(forms.ModelForm): | ||||

| m_tags = TagField() | |||||

| tags = TagField() | |||||

| class Meta: | class Meta: | ||||

| model = CustomUser | model = CustomUser | ||||

| fields = ['m_tags'] | |||||

| fields = ['tags'] |

+ 2

- 12

application/templates/student_page.html

| {% extends "base.html" %} {% block content %} {% load taggit_templatetags2_tags %} {% get_taglist as tags for 'application.post'%} | |||||

| {% extends "base.html" %} {% block content %} {% load taggit_templatetags2_tags %} | |||||

| {% get_taglist as tags for 'application.post'%} | |||||

| <div id=""> | |||||

| <ul> | |||||

| {% for tag in tags %} | |||||

| <li>{{tag}} | |||||

| <a class="btn btn-outline-dark" href="{% url 'tag_remove' tag.slug %}"> | |||||

| <span class="glyphicon glyphicon-remove">Remove</span> | |||||

| </a> | |||||

| </li> | |||||

| {{ result }} {% endfor %} | |||||

| </ul> | |||||

| </div> | |||||

| <div> | <div> | ||||

| <form class="post-form" method="post"> | <form class="post-form" method="post"> | ||||

| {% csrf_token %} {{form.as_p}} | {% csrf_token %} {{form.as_p}} |

+ 9

- 5

application/views.py

| @login_required | @login_required | ||||

| def tag_remove(request, slug=None): | def tag_remove(request, slug=None): | ||||

| log = logging.getLogger('mysite') | |||||

| user_instance = get_object_or_404(CustomUser, user=request.user) | |||||

| log.info(u) | |||||

| tag = Tag.get_object_or_404(Tag, slug = slug) | |||||

| log.info(tag) | |||||

| if slug: | if slug: | ||||

| tag = get_object_or_404(Tag, slug=slug) | |||||

| tag.delete() | |||||

| user_instance.tags.remove(tag) | |||||

| save_m2m() | save_m2m() | ||||

| return redirect('student_page') | |||||

| return redirect('student_page') | |||||

| @login_required | @login_required | ||||

| def student_page(request): | def student_page(request): | ||||

| log = logging.getLogger('mysite') | log = logging.getLogger('mysite') | ||||

| user_instance = get_object_or_404(CustomUser, user=request.user) | user_instance = get_object_or_404(CustomUser, user=request.user) | ||||

| log.info(user_instance) | |||||

| if request.method == "POST": | if request.method == "POST": | ||||

| log.info('post method') | log.info('post method') | ||||

| form = NewTagForm(request.POST, instance=user_instance) | form = NewTagForm(request.POST, instance=user_instance) | ||||

| obj.save() | obj.save() | ||||

| tag_names = [tag.name for tag in Tag.objects.all()] | tag_names = [tag.name for tag in Tag.objects.all()] | ||||

| log.info(tag_names) | log.info(tag_names) | ||||

| m_tags = form.cleaned_data['m_tags'] | |||||

| m_tags = form.cleaned_data['tags'] | |||||

| m_tags = ' '.join(str(m_tags) for m_tags in m_tags) | m_tags = ' '.join(str(m_tags) for m_tags in m_tags) | ||||

| log.info(m_tags) | log.info(m_tags) | ||||

| if m_tags in tag_names: | if m_tags in tag_names: |
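
Review note on the `student_page` hunk above: the submitted tags are joined into one space-separated string, and that string is then tested for membership in `tag_names`. As far as this hunk shows, the test can only succeed when the joined string equals one existing tag name, which in practice means exactly one tag was submitted. A minimal sketch of a per-tag comparison follows, in plain Python with no Django. The helper name `known_tags` and the sample tag lists are hypothetical and are not part of the commit.

```python
def known_tags(submitted, existing):
    """Return the submitted tag names that already exist, keeping their order."""
    existing_set = set(existing)  # set lookup avoids rescanning the list per tag
    return [name for name in submitted if name in existing_set]


# Hypothetical sample data standing in for Tag.objects.all() and the form input.
tag_names = ["python", "django", "docker"]
submitted = ["django", "latex"]

# The approach in the hunk: join first, then test membership of the whole string.
joined = " ".join(submitted)
print(joined in tag_names)

# Per-tag comparison: each submitted name is checked on its own.
print(known_tags(submitted, tag_names))
```

With more than one submitted tag, the joined string `"django latex"` is not an element of `tag_names`, while the per-tag check still reports `django` as already known.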

+ 0

- 0

busybox.tar

+ 36

- 0

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/AcknowledgmentsDedicationSentence/acknowledgements.aux

| \relax | |||||

| \providecommand\hyper@newdestlabel[2]{} | |||||

| \@writefile{toc}{\contentsline {chapter}{Acknowledgements}{iii}{chapter*.2}} | |||||

| \@setckpt{AcknowledgmentsDedicationSentence/acknowledgements}{ | |||||

| \setcounter{page}{4} | |||||

| \setcounter{equation}{0} | |||||

| \setcounter{enumi}{0} | |||||

| \setcounter{enumii}{0} | |||||

| \setcounter{enumiii}{0} | |||||

| \setcounter{enumiv}{0} | |||||

| \setcounter{footnote}{0} | |||||

| \setcounter{mpfootnote}{0} | |||||

| \setcounter{part}{0} | |||||

| \setcounter{chapter}{0} | |||||

| \setcounter{section}{0} | |||||

| \setcounter{subsection}{0} | |||||

| \setcounter{subsubsection}{0} | |||||

| \setcounter{paragraph}{0} | |||||

| \setcounter{subparagraph}{0} | |||||

| \setcounter{figure}{0} | |||||

| \setcounter{table}{0} | |||||

| \setcounter{float@type}{8} | |||||

| \setcounter{parentequation}{0} | |||||

| \setcounter{lstnumber}{1} | |||||

| \setcounter{ContinuedFloat}{0} | |||||

| \setcounter{subfigure}{0} | |||||

| \setcounter{subtable}{0} | |||||

| \setcounter{r@tfl@t}{0} | |||||

| \setcounter{Item}{0} | |||||

| \setcounter{Hfootnote}{0} | |||||

| \setcounter{Hy@AnnotLevel}{0} | |||||

| \setcounter{bookmark@seq@number}{2} | |||||

| \setcounter{NAT@ctr}{0} | |||||

| \setcounter{lstlisting}{0} | |||||

| \setcounter{section@level}{0} | |||||

| } |

+ 4

- 1

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.aux

| \bibcite{King}{{Kin17}{}{{}}{{}}} | \bibcite{King}{{Kin17}{}{{}}{{}}} | ||||

| \bibcite{Leipner}{{Lei13}{}{{}}{{}}} | \bibcite{Leipner}{{Lei13}{}{{}}{{}}} | ||||

| \bibcite{Ndukwe}{{Ndu17}{}{{}}{{}}} | \bibcite{Ndukwe}{{Ndu17}{}{{}}{{}}} | ||||

| \bibcite{Ong}{{Ong18}{}{{}}{{}}} | |||||

| \bibcite{Shabda}{{Sha09}{}{{}}{{}}} | |||||

| \bibcite{Shelest}{{She09}{}{{}}{{}}} | \bibcite{Shelest}{{She09}{}{{}}{{}}} | ||||

| \providecommand\NAT@force@numbers{}\NAT@force@numbers | |||||

| \@writefile{toc}{\contentsline {chapter}{Referenzen}{19}{chapter*.11}} | \@writefile{toc}{\contentsline {chapter}{Referenzen}{19}{chapter*.11}} | ||||

| \bibcite{Timm}{{Tim15}{}{{}}{{}}} | |||||

| \providecommand\NAT@force@numbers{}\NAT@force@numbers |

+ 18

- 0

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.bbl

| \newblock | \newblock | ||||

| https://medium.com/@nnennahacks/https-medium-com-nnennandukwe-python-is-the-back-end-programming-language-of-the-future-heres-why. | https://medium.com/@nnennahacks/https-medium-com-nnennandukwe-python-is-the-back-end-programming-language-of-the-future-heres-why. | ||||

| \bibitem[Ong18]{Ong} | |||||

| Selwin Ong. | |||||

| \newblock django-post\_office git repository. | |||||

| \newblock 2018. | |||||

| \newblock https://github.com/ui/django-post\_office/blob/master/AUTHORS.rst. | |||||

| \bibitem[Sha09]{Shabda} | |||||

| Shabda. | |||||

| \newblock Understanding decorators. | |||||

| \newblock 2009. | |||||

| \newblock https://www.agiliq.com/blog/2009/06/understanding-decorators/. | |||||

| \bibitem[She09]{Shelest} | \bibitem[She09]{Shelest} | ||||

| Alexy Shelest. | Alexy Shelest. | ||||

| \newblock Model view controller, model view presenter, and model view viewmodel | \newblock Model view controller, model view presenter, and model view viewmodel | ||||

| \newblock | \newblock | ||||

| https://www.codeproject.com/Articles/42830/Model-View-Controller-Model-View-Presenter-and-Mod. | https://www.codeproject.com/Articles/42830/Model-View-Controller-Model-View-Presenter-and-Mod. | ||||

| \bibitem[Tim15]{Timm} | |||||

| Damon Timm. | |||||

| \newblock django-hitcount documentation. | |||||

| \newblock 2015. | |||||

| \newblock https://django-hitcount.readthedocs.io/en/latest/overview.html. | |||||

| \end{thebibliography} | \end{thebibliography} |

+ 41

- 34

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.blg

| A level-1 auxiliary file: chapters/fazit.aux | A level-1 auxiliary file: chapters/fazit.aux | ||||

| The style file: alpha.bst | The style file: alpha.bst | ||||

| Database file #1: ../references/References_2.bib | Database file #1: ../references/References_2.bib | ||||

| Repeated entry---line 78 of file ../references/References_2.bib | |||||

| : @article{Ong | |||||

| : , | |||||

| I'm skipping whatever remains of this entry | |||||

| Warning--empty journal in Dixit | Warning--empty journal in Dixit | ||||

| Warning--empty journal in Python | Warning--empty journal in Python | ||||

| Warning--empty journal in Gaynor | Warning--empty journal in Gaynor | ||||

| Warning--empty journal in King | Warning--empty journal in King | ||||

| Warning--empty journal in Leipner | Warning--empty journal in Leipner | ||||

| Warning--empty journal in Ndukwe | Warning--empty journal in Ndukwe | ||||

| Warning--empty journal in Ong | |||||

| Warning--empty journal in Shabda | |||||

| Warning--empty journal in Shelest | Warning--empty journal in Shelest | ||||

| You've used 8 entries, | |||||

| Warning--empty journal in Timm | |||||

| You've used 11 entries, | |||||

| 2543 wiz_defined-function locations, | 2543 wiz_defined-function locations, | ||||

| 611 strings with 6085 characters, | |||||

| and the built_in function-call counts, 2196 in all, are: | |||||

| = -- 216 | |||||

| > -- 64 | |||||

| < -- 8 | |||||

| + -- 16 | |||||

| - -- 16 | |||||

| * -- 106 | |||||

| := -- 390 | |||||

| add.period$ -- 32 | |||||

| call.type$ -- 8 | |||||

| change.case$ -- 40 | |||||

| chr.to.int$ -- 8 | |||||

| cite$ -- 16 | |||||

| duplicate$ -- 112 | |||||

| empty$ -- 161 | |||||

| format.name$ -- 32 | |||||

| if$ -- 426 | |||||

| 626 strings with 6410 characters, | |||||

| and the built_in function-call counts, 3011 in all, are: | |||||

| = -- 297 | |||||

| > -- 88 | |||||

| < -- 11 | |||||

| + -- 22 | |||||

| - -- 22 | |||||

| * -- 145 | |||||

| := -- 533 | |||||

| add.period$ -- 44 | |||||

| call.type$ -- 11 | |||||

| change.case$ -- 55 | |||||

| chr.to.int$ -- 11 | |||||

| cite$ -- 22 | |||||

| duplicate$ -- 154 | |||||

| empty$ -- 221 | |||||

| format.name$ -- 44 | |||||

| if$ -- 585 | |||||

| int.to.chr$ -- 1 | int.to.chr$ -- 1 | ||||

| int.to.str$ -- 0 | int.to.str$ -- 0 | ||||

| missing$ -- 8 | |||||

| newline$ -- 51 | |||||

| num.names$ -- 24 | |||||

| pop$ -- 48 | |||||

| missing$ -- 11 | |||||

| newline$ -- 69 | |||||

| num.names$ -- 33 | |||||

| pop$ -- 66 | |||||

| preamble$ -- 1 | preamble$ -- 1 | ||||

| purify$ -- 48 | |||||

| purify$ -- 66 | |||||

| quote$ -- 0 | quote$ -- 0 | ||||

| skip$ -- 88 | |||||

| skip$ -- 120 | |||||

| stack$ -- 0 | stack$ -- 0 | ||||

| substring$ -- 56 | |||||

| substring$ -- 77 | |||||

| swap$ -- 0 | swap$ -- 0 | ||||

| text.length$ -- 8 | |||||

| text.prefix$ -- 8 | |||||

| text.length$ -- 11 | |||||

| text.prefix$ -- 11 | |||||

| top$ -- 0 | top$ -- 0 | ||||

| type$ -- 64 | |||||

| warning$ -- 8 | |||||

| while$ -- 16 | |||||

| width$ -- 10 | |||||

| write$ -- 106 | |||||

| (There were 8 warnings) | |||||

| type$ -- 88 | |||||

| warning$ -- 11 | |||||

| while$ -- 22 | |||||

| width$ -- 14 | |||||

| write$ -- 145 | |||||

| (There was 1 error message) |
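
Review note on the `.blg` hunk above: the new BibTeX run reports `Repeated entry` for `@article{Ong` at line 78 of `../references/References_2.bib` and ends with one error message. A duplicated citation key is the usual cause of that message. The sketch below is one possible way to list keys that are declared more than once. The function name `duplicate_keys`, the regular expression, and the sample entries are assumptions made for illustration; the real contents of `References_2.bib` are not shown in this diff.

```python
import re
from collections import Counter

# Matches the start of an entry such as "@article{Ong," and captures type and key.
ENTRY_RE = re.compile(r"@(\w+)\s*\{\s*([^,\s]+)\s*,")


def duplicate_keys(bib_text):
    """Return citation keys that are declared more than once, sorted."""
    keys = [
        key
        for kind, key in ENTRY_RE.findall(bib_text)
        if kind.lower() not in {"string", "preamble", "comment"}
    ]
    return sorted(key for key, count in Counter(keys).items() if count > 1)


# Made-up sample; it only mimics the shape of a .bib file.
sample = """
@article{Ong,
  author = {Selwin Ong},
}
@article{Timm,
  author = {Damon Timm},
}
@article{Ong,
  author = {Selwin Ong},
}
"""

print(duplicate_keys(sample))
```

To check the real file, its text could be read with `open(path, encoding="utf-8").read()` and passed to `duplicate_keys`; removing or renaming the second declaration of a reported key should clear the `Repeated entry` error.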

+ 3

- 3

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.lof

| \babel@toc {german}{} | \babel@toc {german}{} | ||||

| \addvspace {10\p@ } | \addvspace {10\p@ } | ||||

| \addvspace {10\p@ } | \addvspace {10\p@ } | ||||

| \contentsline {figure}{\numberline {2.1}{\ignorespaces Vereinfachter MVP\relax }}{6}{figure.caption.5} | |||||

| \contentsline {figure}{\numberline {2.2}{\ignorespaces Request-Response-Kreislauf des Django Frameworks\relax }}{7}{figure.caption.6} | |||||

| \contentsline {figure}{\numberline {2.1}{\ignorespaces Vereinfachter MVP ([She09])\relax }}{6}{figure.caption.5} | |||||

| \contentsline {figure}{\numberline {2.2}{\ignorespaces Request-Response-Kreislauf des Django Frameworks ([Nev15])\relax }}{7}{figure.caption.6} | |||||

| \contentsline {figure}{\numberline {2.3}{\ignorespaces Erstellen der virtuelle Umgebung im Terminal\relax }}{8}{figure.caption.7} | \contentsline {figure}{\numberline {2.3}{\ignorespaces Erstellen der virtuelle Umgebung im Terminal\relax }}{8}{figure.caption.7} | ||||

| \contentsline {figure}{\numberline {2.4}{\ignorespaces Beispiel eines LDAP-Trees\relax }}{9}{figure.caption.8} | \contentsline {figure}{\numberline {2.4}{\ignorespaces Beispiel eines LDAP-Trees\relax }}{9}{figure.caption.8} | ||||

| \contentsline {figure}{\numberline {2.5}{\ignorespaces Einbindung von Bootstrap in einer HTML-Datei\relax }}{11}{figure.caption.9} | |||||

| \contentsline {figure}{\numberline {2.5}{\ignorespaces Einbindung von Bootstrap in einer HTML-Datei\relax }}{12}{figure.caption.9} | |||||

| \contentsline {figure}{\numberline {2.6}{\ignorespaces Bootstrap-Klassen in HTML-Tag\relax }}{12}{figure.caption.10} | \contentsline {figure}{\numberline {2.6}{\ignorespaces Bootstrap-Klassen in HTML-Tag\relax }}{12}{figure.caption.10} | ||||

| \addvspace {10\p@ } | \addvspace {10\p@ } | ||||

| \addvspace {10\p@ } | \addvspace {10\p@ } |

+ 160

- 117

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.log

| This is XeTeX, Version 3.14159265-2.6-0.99999 (TeX Live 2018) (preloaded format=xelatex 2018.6.7) 15 OCT 2018 21:49 | |||||

| This is XeTeX, Version 3.14159265-2.6-0.99999 (TeX Live 2018) (preloaded format=xelatex 2018.6.7) 2 NOV 2018 22:59 | |||||

| entering extended mode | entering extended mode | ||||

| \write18 enabled. | \write18 enabled. | ||||

| file:line:error style messages enabled. | file:line:error style messages enabled. | ||||

| [] | [] | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 85. | |||||

| (babel) in language on input line 82. | |||||

| [2]) | [2]) | ||||

| \openout2 = `abstract/abstract.aux'. | \openout2 = `abstract/abstract.aux'. | ||||

| ] (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.toc) | |||||

| \tf@toc=\write6 | |||||

| \openout6 = `bachelorabeit_EstherKleinhenz.toc'. | |||||

| ] (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.toc | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 50. | |||||

| (babel) in language on input line 28. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 50. | |||||

| (babel) in language on input line 28. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 50. | |||||

| (babel) in language on input line 28. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 50. | |||||

| (babel) in language on input line 28. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 50. | |||||

| (babel) in language on input line 28. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 50. | |||||

| [3] | |||||

| (babel) in language on input line 28. | |||||

| [3]) | |||||

| \tf@toc=\write6 | |||||

| \openout6 = `bachelorabeit_EstherKleinhenz.toc'. | |||||

| Package Fancyhdr Warning: \headheight is too small (12.0pt): | |||||

| Make it at least 14.49998pt. | |||||

| We now make it that large for the rest of the document. | |||||

| This may cause the page layout to be inconsistent, however. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 50. | (babel) in language on input line 50. | ||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 50. | (babel) in language on input line 50. | ||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 50. | (babel) in language on input line 50. | ||||

| [4 | |||||

| ] | |||||

| [4] | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 52. | (babel) in language on input line 52. | ||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| [1 | [1 | ||||

| ] | ] | ||||

| \openout2 = `chapters/einleitung.aux'. | \openout2 = `chapters/einleitung.aux'. | ||||

| Missing character: There is no ̈ in font aer12! | Missing character: There is no ̈ in font aer12! | ||||

| Missing character: There is no ̈ in font aer12! | Missing character: There is no ̈ in font aer12! | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 12. | |||||

| [3] | [3] | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 13. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 13. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 11. | |||||

| (babel) in language on input line 13. | |||||

| Missing character: There is no ̈ in font aer12! | Missing character: There is no ̈ in font aer12! | ||||

| Missing character: There is no ̈ in font aer12! | Missing character: There is no ̈ in font aer12! | ||||

| ) | ) | ||||

| Package Fancyhdr Warning: \headheight is too small (12.0pt): | |||||

| Make it at least 14.49998pt. | |||||

| We now make it that large for the rest of the document. | |||||

| This may cause the page layout to be inconsistent, however. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 57. | (babel) in language on input line 57. | ||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 57. | (babel) in language on input line 57. | ||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 57. | (babel) in language on input line 57. | ||||

| [4] | |||||

| [4] | |||||

| \openout2 = `chapters/framework.aux'. | \openout2 = `chapters/framework.aux'. | ||||

| (./chapters/framework.tex | (./chapters/framework.tex | ||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 1. | (babel) in language on input line 1. | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 7. | |||||

| (babel) in language on input line 8. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 7. | |||||

| (babel) in language on input line 8. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 7. | |||||

| (babel) in language on input line 8. | |||||

| File: figures/MVP.png Graphic file (type bmp) | File: figures/MVP.png Graphic file (type bmp) | ||||

| <figures/MVP.png> | <figures/MVP.png> | ||||

| LaTeX Warning: `!h' float specifier changed to `!ht'. | LaTeX Warning: `!h' float specifier changed to `!ht'. | ||||

| LaTeX Font Info: Try loading font information for TS1+aer on input line 21. | |||||

| LaTeX Font Info: No file TS1aer.fd. on input line 21. | |||||

| LaTeX Font Warning: Font shape `TS1/aer/m/n' undefined | |||||

| (Font) using `TS1/cmr/m/n' instead | |||||

| (Font) for symbol `textbullet' on input line 21. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 22. | (babel) in language on input line 22. | ||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| ] | ] | ||||

| LaTeX Font Info: Try loading font information for TS1+aer on input line 22. | |||||

| LaTeX Font Info: No file TS1aer.fd. on input line 22. | |||||

| LaTeX Font Warning: Font shape `TS1/aer/m/n' undefined | |||||

| (Font) using `TS1/cmr/m/n' instead | |||||

| (Font) for symbol `textbullet' on input line 22. | |||||

| File: figures/request-response-cycle.png Graphic file (type bmp) | File: figures/request-response-cycle.png Graphic file (type bmp) | ||||

| <figures/request-response-cycle.png> | <figures/request-response-cycle.png> | ||||

| LaTeX Warning: `!h' float specifier changed to `!ht'. | LaTeX Warning: `!h' float specifier changed to `!ht'. | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 36. | |||||

| (babel) in language on input line 37. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 36. | |||||

| (babel) in language on input line 37. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 36. | |||||

| (babel) in language on input line 37. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 51. | |||||

| (babel) in language on input line 40. | |||||

| [6] | [6] | ||||

| Underfull \vbox (badness 10000) has occurred while \output is active [] | |||||

| Underfull \vbox (badness 2951) has occurred while \output is active [] | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 53. | |||||

| (babel) in language on input line 52. | |||||

| [7] | [7] | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 56. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 56. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 56. | |||||

| File: figures/virt-env-terminal.png Graphic file (type bmp) | File: figures/virt-env-terminal.png Graphic file (type bmp) | ||||

| <figures/virt-env-terminal.png> | <figures/virt-env-terminal.png> | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 70. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 70. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 70. | |||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 73. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 73. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 73. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 73. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 73. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 80. | |||||

| (babel) in language on input line 73. | |||||

| [8] | [8] | ||||

| File: figures/ldap-tree.png Graphic file (type bmp) | File: figures/ldap-tree.png Graphic file (type bmp) | ||||

| <figures/ldap-tree.png> | <figures/ldap-tree.png> | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 95. | |||||

| (babel) in language on input line 81. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 95. | |||||

| (babel) in language on input line 81. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 95. | |||||

| (babel) in language on input line 81. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 84. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 84. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 84. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 86. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 86. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 97. | |||||

| (babel) in language on input line 86. | |||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 86. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 86. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 86. | |||||

| [9] | [9] | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 118. | |||||

| (babel) in language on input line 104. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 118. | |||||

| (babel) in language on input line 104. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 118. | |||||

| Underfull \vbox (badness 10000) has occurred while \output is active [] | |||||

| (babel) in language on input line 104. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 120. | |||||

| (babel) in language on input line 108. | |||||

| [10] | [10] | ||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 115. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 115. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 115. | |||||

| File: figures/bootstrap-head-tag.png Graphic file (type bmp) | File: figures/bootstrap-head-tag.png Graphic file (type bmp) | ||||

| <figures/bootstrap-head-tag.png> | <figures/bootstrap-head-tag.png> | ||||

| File: figures/bootstrap-class-example.png Graphic file (type bmp) | |||||

| <figures/bootstrap-class-example.png> | |||||

| LaTeX Warning: `!h' float specifier changed to `!ht'. | LaTeX Warning: `!h' float specifier changed to `!ht'. | ||||

| ) | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 128. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 128. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 128. | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 128. | |||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| (babel) in language on input line 58. | |||||

| (babel) in language on input line 128. | |||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 58. | |||||

| [11] | |||||

| (babel) in language on input line 128. | |||||

| [11] | |||||

| File: figures/bootstrap-class-example.png Graphic file (type bmp) | |||||

| <figures/bootstrap-class-example.png> | |||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 140. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 140. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 140. | |||||

| ) | |||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 58. | (babel) in language on input line 58. | ||||

| Package babel Info: Redefining german shorthand "| | Package babel Info: Redefining german shorthand "| | ||||

| [] | [] | ||||

| Underfull \hbox (badness 10000) in paragraph at lines 54--60 | |||||

| Underfull \hbox (badness 10000) in paragraph at lines 54--58 | |||||

| []\T1/aer/m/n/12 Selwin Ong. django-post_office git re-po-si-to-ry. 2018. | |||||

| [] | |||||

| Underfull \hbox (badness 10000) in paragraph at lines 60--64 | |||||

| []\T1/aer/m/n/12 Shabda. Un-der-stan-ding de-co-ra-tors. 2009. | |||||

| [] | |||||

| Underfull \hbox (badness 10000) in paragraph at lines 66--72 | |||||

| []\T1/aer/m/n/12 Alexy She-lest. Mo-del view con-trol-ler, mo-del view pre- | []\T1/aer/m/n/12 Alexy She-lest. Mo-del view con-trol-ler, mo-del view pre- | ||||

| [] | [] | ||||

| Underfull \hbox (badness 10000) in paragraph at lines 54--60 | |||||

| Underfull \hbox (badness 10000) in paragraph at lines 66--72 | |||||

| \T1/aer/m/n/12 sen-ter, and mo-del view view-mo-del de-sign pat-terns. 2009. | \T1/aer/m/n/12 sen-ter, and mo-del view view-mo-del de-sign pat-terns. 2009. | ||||

| [] | [] | ||||

| Underfull \hbox (badness 10000) in paragraph at lines 54--60 | |||||

| Underfull \hbox (badness 10000) in paragraph at lines 66--72 | |||||

| \T1/aer/m/n/12 https://www.codeproject.com/Articles/42830/Model-View-Controller- | \T1/aer/m/n/12 https://www.codeproject.com/Articles/42830/Model-View-Controller- | ||||

| [] | [] | ||||

| ) | |||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 72. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 72. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 72. | |||||

| Package babel Info: Redefining german shorthand "f | |||||

| (babel) in language on input line 72. | |||||

| Package babel Info: Redefining german shorthand "| | |||||

| (babel) in language on input line 72. | |||||

| Package babel Info: Redefining german shorthand "~ | |||||

| (babel) in language on input line 72. | |||||

| [19]) | |||||

| Package atveryend Info: Empty hook `BeforeClearDocument' on input line 77. | Package atveryend Info: Empty hook `BeforeClearDocument' on input line 77. | ||||

| Package babel Info: Redefining german shorthand "f | Package babel Info: Redefining german shorthand "f | ||||

| (babel) in language on input line 77. | (babel) in language on input line 77. | ||||

| (babel) in language on input line 77. | (babel) in language on input line 77. | ||||

| Package babel Info: Redefining german shorthand "~ | Package babel Info: Redefining german shorthand "~ | ||||

| (babel) in language on input line 77. | (babel) in language on input line 77. | ||||

| [19] | |||||

| [20] | |||||

| Package atveryend Info: Empty hook `AfterLastShipout' on input line 77. | Package atveryend Info: Empty hook `AfterLastShipout' on input line 77. | ||||

| (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.aux (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/titlepage/titlepage.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/abstract/abstract.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/einleitung.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/framework.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/prototyp.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ergebnis.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ausblick.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/fazit.aux)) | (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.aux (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/titlepage/titlepage.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/abstract/abstract.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/einleitung.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/framework.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/prototyp.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ergebnis.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ausblick.aux) (/Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/fazit.aux)) | ||||

| Package atveryend Info: Empty hook `AtVeryEndDocument' on input line 77. | Package atveryend Info: Empty hook `AtVeryEndDocument' on input line 77. | ||||

| ) | ) | ||||

| (\end occurred when \ifnum on line 5 was incomplete) | (\end occurred when \ifnum on line 5 was incomplete) | ||||

| Here is how much of TeX's memory you used: | Here is how much of TeX's memory you used: | ||||

| 26561 strings out of 492970 | |||||

| 476474 string characters out of 6133939 | |||||

| 547394 words of memory out of 5000000 | |||||

| 30175 multiletter control sequences out of 15000+600000 | |||||

| 554220 words of font info for 61 fonts, out of 8000000 for 9000 | |||||

| 26572 strings out of 492970 | |||||

| 476605 string characters out of 6133939 | |||||

| 546800 words of memory out of 5000000 | |||||

| 30185 multiletter control sequences out of 15000+600000 | |||||

| 555998 words of font info for 62 fonts, out of 8000000 for 9000 | |||||

| 1348 hyphenation exceptions out of 8191 | 1348 hyphenation exceptions out of 8191 | ||||

| 58i,11n,50p,10437b,892s stack positions out of 5000i,500n,10000p,200000b,80000s | |||||

| 58i,12n,50p,10437b,943s stack positions out of 5000i,500n,10000p,200000b,80000s | |||||

| Output written on /Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.pdf (25 pages). | |||||

| Output written on /Users/Esthi/thesis_ek/doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.pdf (26 pages). |

+ 20

- 16

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.out

| \BOOKMARK [1][-]{section.1.2}{Ziel\040der\040Arbeit}{chapter.1}% 5 | \BOOKMARK [1][-]{section.1.2}{Ziel\040der\040Arbeit}{chapter.1}% 5 | ||||

| \BOOKMARK [0][-]{chapter.2}{Framework}{}% 6 | \BOOKMARK [0][-]{chapter.2}{Framework}{}% 6 | ||||

| \BOOKMARK [1][-]{section.2.1}{Django}{chapter.2}% 7 | \BOOKMARK [1][-]{section.2.1}{Django}{chapter.2}% 7 | ||||

| \BOOKMARK [2][-]{subsection.2.1.1}{Besonderheiten}{section.2.1}% 8 | |||||

| \BOOKMARK [1][-]{section.2.2}{Erweiterungen}{chapter.2}% 9 | |||||

| \BOOKMARK [2][-]{subsection.2.2.1}{Taggable-Manager}{section.2.2}% 10 | |||||

| \BOOKMARK [1][-]{section.2.3}{Bootstrap}{chapter.2}% 11 | |||||

| \BOOKMARK [0][-]{chapter.3}{Prototyp}{}% 12 | |||||

| \BOOKMARK [1][-]{section.3.1}{Organisation}{chapter.3}% 13 | |||||

| \BOOKMARK [2][-]{subsection.3.1.1}{Verwaltung\040im\040Administrator-Backend}{section.3.1}% 14 | |||||

| \BOOKMARK [2][-]{subsection.3.1.2}{Berechtigung\040der\040User}{section.3.1}% 15 | |||||

| \BOOKMARK [1][-]{section.3.2}{Funktion}{chapter.3}% 16 | |||||

| \BOOKMARK [2][-]{subsection.3.2.1}{Abonnieren}{section.3.2}% 17 | |||||

| \BOOKMARK [2][-]{subsection.3.2.2}{Filtern}{section.3.2}% 18 | |||||

| \BOOKMARK [2][-]{subsection.3.2.3}{Benachrichtigung}{section.3.2}% 19 | |||||

| \BOOKMARK [0][-]{chapter.4}{Ergebnis}{}% 20 | |||||

| \BOOKMARK [1][-]{subsection.4.0.1}{Evaluierung}{chapter.4}% 21 | |||||

| \BOOKMARK [0][-]{chapter.5}{Zusammenfassung\040und\040Ausblick}{}% 22 | |||||

| \BOOKMARK [0][-]{chapter*.11}{Referenzen}{}% 23 | |||||

| \BOOKMARK [2][-]{subsection.2.1.1}{Besonderheiten\040Django's}{section.2.1}% 8 | |||||

| \BOOKMARK [2][-]{subsection.2.1.2}{Virtuelle\040Umgebung}{section.2.1}% 9 | |||||

| \BOOKMARK [2][-]{subsection.2.1.3}{Lightweight\040Directory\040Access\040Protocol}{section.2.1}% 10 | |||||

| \BOOKMARK [1][-]{section.2.2}{Erweiterungen}{chapter.2}% 11 | |||||

| \BOOKMARK [2][-]{subsection.2.2.1}{Taggable-Manager}{section.2.2}% 12 | |||||

| \BOOKMARK [2][-]{subsection.2.2.2}{Hilfsbibliotheken}{section.2.2}% 13 | |||||

| \BOOKMARK [1][-]{section.2.3}{Bootstrap}{chapter.2}% 14 | |||||

| \BOOKMARK [1][-]{section.2.4}{Cron}{chapter.2}% 15 | |||||

| \BOOKMARK [0][-]{chapter.3}{Prototyp}{}% 16 | |||||

| \BOOKMARK [1][-]{section.3.1}{Organisation}{chapter.3}% 17 | |||||

| \BOOKMARK [2][-]{subsection.3.1.1}{Verwaltung\040im\040Administrator-Backend}{section.3.1}% 18 | |||||

| \BOOKMARK [2][-]{subsection.3.1.2}{Berechtigung\040der\040User}{section.3.1}% 19 | |||||

| \BOOKMARK [1][-]{section.3.2}{Funktionen}{chapter.3}% 20 | |||||

| \BOOKMARK [2][-]{subsection.3.2.1}{Abonnieren}{section.3.2}% 21 | |||||

| \BOOKMARK [2][-]{subsection.3.2.2}{Filtern}{section.3.2}% 22 | |||||

| \BOOKMARK [2][-]{subsection.3.2.3}{Benachrichtigung}{section.3.2}% 23 | |||||

| \BOOKMARK [0][-]{chapter.4}{Ergebnis}{}% 24 | |||||

| \BOOKMARK [1][-]{subsection.4.0.1}{Evaluierung}{chapter.4}% 25 | |||||

| \BOOKMARK [0][-]{chapter.5}{Zusammenfassung\040und\040Ausblick}{}% 26 | |||||

| \BOOKMARK [0][-]{chapter*.11}{Referenzen}{}% 27 |

BIN

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.synctex.gz

+ 8

- 4

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/bachelorabeit_EstherKleinhenz.toc

| \contentsline {section}{\numberline {1.2}Ziel der Arbeit}{4}{section.1.2} | \contentsline {section}{\numberline {1.2}Ziel der Arbeit}{4}{section.1.2} | ||||

| \contentsline {chapter}{\numberline {2}Framework}{5}{chapter.2} | \contentsline {chapter}{\numberline {2}Framework}{5}{chapter.2} | ||||

| \contentsline {section}{\numberline {2.1}Django}{5}{section.2.1} | \contentsline {section}{\numberline {2.1}Django}{5}{section.2.1} | ||||

| \contentsline {subsection}{\numberline {2.1.1}Besonderheiten}{6}{subsection.2.1.1} | |||||

| \contentsline {section}{\numberline {2.2}Erweiterungen}{8}{section.2.2} | |||||

| \contentsline {subsection}{\numberline {2.2.1}Taggable-Manager}{10}{subsection.2.2.1} | |||||

| \contentsline {subsection}{\numberline {2.1.1}Besonderheiten Django's}{7}{subsection.2.1.1} | |||||

| \contentsline {subsection}{\numberline {2.1.2}Virtuelle Umgebung}{8}{subsection.2.1.2} | |||||

| \contentsline {subsection}{\numberline {2.1.3}Lightweight Directory Access Protocol}{8}{subsection.2.1.3} | |||||

| \contentsline {section}{\numberline {2.2}Erweiterungen}{9}{section.2.2} | |||||

| \contentsline {subsection}{\numberline {2.2.1}Taggable-Manager}{9}{subsection.2.2.1} | |||||

| \contentsline {subsection}{\numberline {2.2.2}Hilfsbibliotheken}{10}{subsection.2.2.2} | |||||

| \contentsline {section}{\numberline {2.3}Bootstrap}{11}{section.2.3} | \contentsline {section}{\numberline {2.3}Bootstrap}{11}{section.2.3} | ||||

| \contentsline {section}{\numberline {2.4}Cron}{12}{section.2.4} | |||||

| \contentsline {chapter}{\numberline {3}Prototyp}{13}{chapter.3} | \contentsline {chapter}{\numberline {3}Prototyp}{13}{chapter.3} | ||||

| \contentsline {section}{\numberline {3.1}Organisation}{13}{section.3.1} | \contentsline {section}{\numberline {3.1}Organisation}{13}{section.3.1} | ||||

| \contentsline {subsection}{\numberline {3.1.1}Verwaltung im Administrator-Backend}{13}{subsection.3.1.1} | \contentsline {subsection}{\numberline {3.1.1}Verwaltung im Administrator-Backend}{13}{subsection.3.1.1} | ||||

| \contentsline {subsection}{\numberline {3.1.2}Berechtigung der User}{13}{subsection.3.1.2} | \contentsline {subsection}{\numberline {3.1.2}Berechtigung der User}{13}{subsection.3.1.2} | ||||

| \contentsline {section}{\numberline {3.2}Funktion}{13}{section.3.2} | |||||

| \contentsline {section}{\numberline {3.2}Funktionen}{13}{section.3.2} | |||||

| \contentsline {subsection}{\numberline {3.2.1}Abonnieren}{13}{subsection.3.2.1} | \contentsline {subsection}{\numberline {3.2.1}Abonnieren}{13}{subsection.3.2.1} | ||||

| \contentsline {subsection}{\numberline {3.2.2}Filtern}{13}{subsection.3.2.2} | \contentsline {subsection}{\numberline {3.2.2}Filtern}{13}{subsection.3.2.2} | ||||

| \contentsline {subsection}{\numberline {3.2.3}Benachrichtigung}{14}{subsection.3.2.3} | \contentsline {subsection}{\numberline {3.2.3}Benachrichtigung}{14}{subsection.3.2.3} |

+ 1

- 1

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ausblick.aux

| \setcounter{Item}{0} | \setcounter{Item}{0} | ||||

| \setcounter{Hfootnote}{0} | \setcounter{Hfootnote}{0} | ||||

| \setcounter{Hy@AnnotLevel}{0} | \setcounter{Hy@AnnotLevel}{0} | ||||

| \setcounter{bookmark@seq@number}{21} | |||||

| \setcounter{bookmark@seq@number}{25} | |||||

| \setcounter{NAT@ctr}{0} | \setcounter{NAT@ctr}{0} | ||||

| \setcounter{lstlisting}{0} | \setcounter{lstlisting}{0} | ||||

| \setcounter{section@level}{0} | \setcounter{section@level}{0} |

+ 1

- 1

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/ergebnis.aux

| \setcounter{Item}{0} | \setcounter{Item}{0} | ||||

| \setcounter{Hfootnote}{0} | \setcounter{Hfootnote}{0} | ||||

| \setcounter{Hy@AnnotLevel}{0} | \setcounter{Hy@AnnotLevel}{0} | ||||

| \setcounter{bookmark@seq@number}{21} | |||||

| \setcounter{bookmark@seq@number}{25} | |||||

| \setcounter{NAT@ctr}{0} | \setcounter{NAT@ctr}{0} | ||||

| \setcounter{lstlisting}{0} | \setcounter{lstlisting}{0} | ||||

| \setcounter{section@level}{0} | \setcounter{section@level}{0} |

+ 1

- 1

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/fazit.aux

| \setcounter{Item}{0} | \setcounter{Item}{0} | ||||

| \setcounter{Hfootnote}{0} | \setcounter{Hfootnote}{0} | ||||

| \setcounter{Hy@AnnotLevel}{0} | \setcounter{Hy@AnnotLevel}{0} | ||||

| \setcounter{bookmark@seq@number}{22} | |||||

| \setcounter{bookmark@seq@number}{26} | |||||

| \setcounter{NAT@ctr}{0} | \setcounter{NAT@ctr}{0} | ||||

| \setcounter{lstlisting}{0} | \setcounter{lstlisting}{0} | ||||

| \setcounter{section@level}{0} | \setcounter{section@level}{0} |

+ 12

- 8

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/framework.aux

| \@writefile{lot}{\addvspace {10\p@ }} | \@writefile{lot}{\addvspace {10\p@ }} | ||||

| \newlabel{ch:framework}{{2}{5}{Framework}{chapter.2}{}} | \newlabel{ch:framework}{{2}{5}{Framework}{chapter.2}{}} | ||||

| \@writefile{toc}{\contentsline {section}{\numberline {2.1}Django}{5}{section.2.1}} | \@writefile{toc}{\contentsline {section}{\numberline {2.1}Django}{5}{section.2.1}} | ||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.1}{\ignorespaces Vereinfachter MVP\relax }}{6}{figure.caption.5}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.1.1}Besonderheiten}{6}{subsection.2.1.1}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.2}{\ignorespaces Request-Response-Kreislauf des Django Frameworks\relax }}{7}{figure.caption.6}} | |||||

| \@writefile{toc}{\contentsline {section}{\numberline {2.2}Erweiterungen}{8}{section.2.2}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.1}{\ignorespaces Vereinfachter MVP ([She09])\relax }}{6}{figure.caption.5}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.2}{\ignorespaces Request-Response-Kreislauf des Django Frameworks ([Nev15])\relax }}{7}{figure.caption.6}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.1.1}Besonderheiten Django's}{7}{subsection.2.1.1}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.1.2}Virtuelle Umgebung}{8}{subsection.2.1.2}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.3}{\ignorespaces Erstellen der virtuelle Umgebung im Terminal\relax }}{8}{figure.caption.7}} | \@writefile{lof}{\contentsline {figure}{\numberline {2.3}{\ignorespaces Erstellen der virtuelle Umgebung im Terminal\relax }}{8}{figure.caption.7}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.1.3}Lightweight Directory Access Protocol}{8}{subsection.2.1.3}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.4}{\ignorespaces Beispiel eines LDAP-Trees\relax }}{9}{figure.caption.8}} | \@writefile{lof}{\contentsline {figure}{\numberline {2.4}{\ignorespaces Beispiel eines LDAP-Trees\relax }}{9}{figure.caption.8}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.2.1}Taggable-Manager}{10}{subsection.2.2.1}} | |||||

| \@writefile{toc}{\contentsline {section}{\numberline {2.2}Erweiterungen}{9}{section.2.2}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.2.1}Taggable-Manager}{9}{subsection.2.2.1}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {2.2.2}Hilfsbibliotheken}{10}{subsection.2.2.2}} | |||||

| \@writefile{toc}{\contentsline {section}{\numberline {2.3}Bootstrap}{11}{section.2.3}} | \@writefile{toc}{\contentsline {section}{\numberline {2.3}Bootstrap}{11}{section.2.3}} | ||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.5}{\ignorespaces Einbindung von Bootstrap in einer HTML-Datei\relax }}{11}{figure.caption.9}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.5}{\ignorespaces Einbindung von Bootstrap in einer HTML-Datei\relax }}{12}{figure.caption.9}} | |||||

| \@writefile{lof}{\contentsline {figure}{\numberline {2.6}{\ignorespaces Bootstrap-Klassen in HTML-Tag\relax }}{12}{figure.caption.10}} | \@writefile{lof}{\contentsline {figure}{\numberline {2.6}{\ignorespaces Bootstrap-Klassen in HTML-Tag\relax }}{12}{figure.caption.10}} | ||||

| \@writefile{toc}{\contentsline {section}{\numberline {2.4}Cron}{12}{section.2.4}} | |||||

| \@setckpt{chapters/framework}{ | \@setckpt{chapters/framework}{ | ||||

| \setcounter{page}{13} | \setcounter{page}{13} | ||||

| \setcounter{equation}{0} | \setcounter{equation}{0} | ||||

| \setcounter{mpfootnote}{0} | \setcounter{mpfootnote}{0} | ||||

| \setcounter{part}{0} | \setcounter{part}{0} | ||||

| \setcounter{chapter}{2} | \setcounter{chapter}{2} | ||||

| \setcounter{section}{3} | |||||

| \setcounter{section}{4} | |||||

| \setcounter{subsection}{0} | \setcounter{subsection}{0} | ||||

| \setcounter{subsubsection}{0} | \setcounter{subsubsection}{0} | ||||

| \setcounter{paragraph}{0} | \setcounter{paragraph}{0} | ||||

| \setcounter{Item}{0} | \setcounter{Item}{0} | ||||

| \setcounter{Hfootnote}{0} | \setcounter{Hfootnote}{0} | ||||

| \setcounter{Hy@AnnotLevel}{0} | \setcounter{Hy@AnnotLevel}{0} | ||||

| \setcounter{bookmark@seq@number}{11} | |||||

| \setcounter{bookmark@seq@number}{15} | |||||

| \setcounter{NAT@ctr}{0} | \setcounter{NAT@ctr}{0} | ||||

| \setcounter{lstlisting}{0} | \setcounter{lstlisting}{0} | ||||

| \setcounter{section@level}{0} | \setcounter{section@level}{0} |

+ 2

- 2

doc/bachelorarbeit_EstherKleinhenz/.texpadtmp/chapters/prototyp.aux

| \@writefile{toc}{\contentsline {section}{\numberline {3.1}Organisation}{13}{section.3.1}} | \@writefile{toc}{\contentsline {section}{\numberline {3.1}Organisation}{13}{section.3.1}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {3.1.1}Verwaltung im Administrator-Backend}{13}{subsection.3.1.1}} | \@writefile{toc}{\contentsline {subsection}{\numberline {3.1.1}Verwaltung im Administrator-Backend}{13}{subsection.3.1.1}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {3.1.2}Berechtigung der User}{13}{subsection.3.1.2}} | \@writefile{toc}{\contentsline {subsection}{\numberline {3.1.2}Berechtigung der User}{13}{subsection.3.1.2}} | ||||

| \@writefile{toc}{\contentsline {section}{\numberline {3.2}Funktion}{13}{section.3.2}} | |||||

| \@writefile{toc}{\contentsline {section}{\numberline {3.2}Funktionen}{13}{section.3.2}} | |||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.1}Abonnieren}{13}{subsection.3.2.1}} | \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.1}Abonnieren}{13}{subsection.3.2.1}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.2}Filtern}{13}{subsection.3.2.2}} | \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.2}Filtern}{13}{subsection.3.2.2}} | ||||

| \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.3}Benachrichtigung}{14}{subsection.3.2.3}} | \@writefile{toc}{\contentsline {subsection}{\numberline {3.2.3}Benachrichtigung}{14}{subsection.3.2.3}} | ||||

| \setcounter{Item}{0} | \setcounter{Item}{0} | ||||

| \setcounter{Hfootnote}{0} | \setcounter{Hfootnote}{0} | ||||

| \setcounter{Hy@AnnotLevel}{0} | \setcounter{Hy@AnnotLevel}{0} | ||||

| \setcounter{bookmark@seq@number}{19} | |||||

| \setcounter{bookmark@seq@number}{23} | |||||

| \setcounter{NAT@ctr}{0} | \setcounter{NAT@ctr}{0} | ||||

| \setcounter{lstlisting}{0} | \setcounter{lstlisting}{0} | ||||

| \setcounter{section@level}{0} | \setcounter{section@level}{0} |

BIN

doc/bachelorarbeit_EstherKleinhenz/bachelorabeit_EstherKleinhenz.pdf

+ 4

- 1

doc/bachelorarbeit_EstherKleinhenz/chapters/einleitung.tex

| \section{Ausgangssituation} | \section{Ausgangssituation} | ||||

| All information from the faculty Elektrotechnik Feinwerktechnik Informationstechnik (electrical engineering, precision engineering, information technology), efi for short, is sent via the global distribution lists of the university's internal mailbox. However, much of this information is relevant only to a small subset of the recipients and is hard to prioritize. The constantly overloaded mailbox therefore has to be cleaned up regularly. Filtering e-mails and managing them at one's own discretion involves considerable administrative effort. | All information from the faculty Elektrotechnik Feinwerktechnik Informationstechnik (electrical engineering, precision engineering, information technology), efi for short, is sent via the global distribution lists of the university's internal mailbox. However, much of this information is relevant only to a small subset of the recipients and is hard to prioritize. The constantly overloaded mailbox therefore has to be cleaned up regularly. Filtering e-mails and managing them at one's own discretion involves considerable administrative effort. | ||||

| ---state more precisely how I know that the mailbox is overloaded | |||||

| In addition, the longevity of the information suffers. When recipients want to retrieve older e-mails, these have usually already had to be deleted to make room for new incoming e-mail traffic. | In addition, the longevity of the information suffers. When recipients want to retrieve older e-mails, these have usually already had to be deleted to make room for new incoming e-mail traffic. | ||||

| As a result, recipients usually delete the information immediately without reading it. The authors have no way of checking whether, and how many, students and lecturers open and read incoming messages. | As a result, recipients usually delete the information immediately without reading it. The authors have no way of checking whether, and how many, students and lecturers open and read incoming messages. | ||||

| ---research question | |||||

| \section{Ziel der Arbeit} | \section{Ziel der Arbeit} | ||||

| The goal of this thesis is to reduce the storage load on the university mailbox for students of the efi faculty by integrating a social media platform. The flood of e-mails is to be curbed by a personalized dashboard. Initially, the focus is on the basic functions of the website: subscribing, adding new messages, and deleting old ones. | The goal of this thesis is to reduce the storage load on the university mailbox for students of the efi faculty by integrating a social media platform. The flood of e-mails is to be curbed by a personalized dashboard. Initially, the focus is on the basic functions of the website: subscribing, adding new messages, and deleting old ones. | ||||

| In addition, authors are to be notified of the extent to which the uploaded information has already been subscribed to and read. | |||||

| In addition, authors are to be notified of the extent to which the uploaded information has already been subscribed to and read. | |||||

| ---too short |

+ 13

- 0

doc/bachelorarbeit_EstherKleinhenz/chapters/ergebnis.tex

| \label{ch:ergebnis} | \label{ch:ergebnis} | ||||

| \subsection{Evaluierung} | \subsection{Evaluierung} | ||||

| Eine weitere hilfreiche Erweiterung ist pylint. Das Tool sucht nicht nur nach Fehlern im Code, sondern versucht diesen sauber und einheitlich zu gestalten. Hierbei wird auf den Code-Standard PEP-8 geprüft [Dix18]. Die folgende Liste zeigt eine Kurzfassung der wichtigsten Regeln: | |||||

| \begin{itemize} | |||||

| \item Indentation with 4 spaces per level | |||||

| \item Maximum line length (79 characters) | |||||

| \item Two blank lines between top-level classes and functions | |||||

| \item One blank line between methods inside a class | |||||

| \item Avoid superfluous whitespace in expressions and statements | |||||

| \item Import order: standard library, third-party libraries, local application | |||||

| \item Naming conventions for functions, modules, etc. | |||||

| \end{itemize} | |||||

| These are, of course, guidelines that can be followed but are not required for the code to be compiled and executed. |
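The PEP 8 rules listed above can be illustrated with a short module. This is only a sketch: the class `MessageCounter` and the function `summarize` are hypothetical names chosen for illustration and are not taken from the thesis code.

```python
"""Illustrative module laid out according to the PEP 8 rules listed above."""

# Imports come first, grouped as: standard library, third-party, local.
# Only a standard-library import is needed in this sketch.
import json


class MessageCounter:
    """Hypothetical class, used only to demonstrate the layout rules."""

    def __init__(self):
        self.read_count = 0

    def mark_read(self, amount=1):
        # One blank line separates methods; each level is indented by 4 spaces.
        self.read_count += amount
        return self.read_count


# Two blank lines separate top-level classes and functions.
def summarize(counter):
    """Return the counter state as a JSON string (snake_case function name)."""
    return json.dumps({"read": counter.read_count})
```

Running pylint on such a file should report few or no style messages, whereas the same logic with inconsistent indentation or missing blank lines would still execute but be flagged.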

+ 34

- 37

doc/bachelorarbeit_EstherKleinhenz/chapters/framework.tex


| \chapter{Framework} | \chapter{Framework} | ||||

| \label{ch:framework} | \label{ch:framework} | ||||

| In order to implement the website extension, it is first determined which programming interfaces will be used. For the web backend, the choice falls on the object-oriented language Python, which is used on the server side in this project. Python's program structure makes the code easy to read, and its simple syntax allows a structured implementation of the website ([Ndu17]). The many abstract data types, such as dynamic arrays (lists) and dictionaries, are widely applicable. | In order to implement the website extension, it is first determined which programming interfaces will be used. For the web backend, the choice falls on the object-oriented language Python, which is used on the server side in this project. Python's program structure makes the code easy to read, and its simple syntax allows a structured implementation of the website ([Ndu17]). The many abstract data types, such as dynamic arrays (lists) and dictionaries, are widely applicable. | ||||

| --- Why Python exactly? | |||||

| A decisive advantage here is the associated framework Django, which is discussed in more detail in the following section. | A decisive advantage here is the associated framework Django, which is discussed in more detail in the following section. | ||||

| ---Is Python server-side only? | |||||

| \section{Django} | \section{Django} | ||||

| Django is a web framework based on a Model-View-Presenter (MVP) architecture. As in the Model-View-Controller pattern, the interactions between model and view consist of selecting and executing commands and triggering events (cf. Figure 2.1). Since the view here already takes over most of the controller's tasks, MVP is a revision of that pattern. The part that selects elements of the model, performs operations and encapsulates all events forms the presenter class ([She09]). The direct binding of data and view, governed by the presenter, greatly reduces the amount of application code. | |||||

| Django is a web framework that enables fast, structured development while maintaining a simple design. Its Model-View-Presenter (MVP) can, similarly to the Model-View-Controller, control the interactions between model and view, the selection and execution of commands, and the triggering of events (cf. Figure 2.1). Since the view here already takes over most of the controller's tasks, MVP is a revision of that pattern. The part that selects elements of the model, performs operations and encapsulates all events forms the presenter class (cf. [She09]). The direct binding of data and view, governed by the presenter, greatly reduces the amount of application code. | |||||

| \begin{figure}[!h] | \begin{figure}[!h] | ||||

| \centering | \centering | ||||

| \includegraphics[width=0.5\textwidth]{figures/MVP} | |||||

| \caption{Simplified MVP} | |||||

| \includegraphics[width=0.6\textwidth]{figures/MVP} | |||||

| \caption{Simplified MVP ([She09])} | |||||

| \hfill | \hfill | ||||

| \end{figure} | \end{figure} | ||||

| The process from requesting the URL via the server to the fully rendered website can be described in simplified form as follows. The user enters a URL in the browser and sends it to the web server. | |||||

| The process from requesting the URL via the server to the fully rendered website can be described in simplified form as follows. | |||||

| The WSGI interface on the web server connects it to the web framework by forwarding the request to the matching object. Here, a callback function is made available to the application [Kin17]. In addition, the following steps are carried out: | |||||

| The user enters a URL in the browser and sends it to the web server. The WSGI interface on the web server connects it to the web framework by forwarding the request to the matching object. Here, a callback function is made available to the application (cf. [Kin17]). In addition, the following steps are carried out: | |||||

| \begin{itemize} | \begin{itemize} | ||||

| \item The middleware classes from settings.py are loaded | \item The middleware classes from settings.py are loaded | ||||

| \item The methods of the request, view, response and exception lists are loaded | \item The methods of the request, view, response and exception lists are loaded | ||||

| \end{itemize} | \end{itemize} | ||||

| The WSGI handler thus acts as gatekeeper and manager between the web server and the Django project. | The WSGI handler thus acts as gatekeeper and manager between the web server and the Django project. | ||||
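The callback mechanism of WSGI described above can be sketched with the standard library alone. This is not Django's own handler, only a minimal WSGI callable; the names `application` and `call_once` are chosen here for illustration.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Minimal WSGI callable.

    The server passes in the request environment and the start_response
    callback, which the application calls with status and headers.
    """
    body = "Requested path: {}".format(environ["PATH_INFO"]).encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def call_once(path):
    """Invoke the application the way a server would, without a socket."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        # Record what the application reports back through the callback.
        captured["status"] = status
        captured["headers"] = response_headers

    body = b"".join(application(environ, start_response))
    return captured["status"], body
```

In a Django project the corresponding callable is generated in the project's wsgi.py, so application code normally never calls `start_response` itself.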

| To resolve the URL, as mentioned above, WSGI needs a urlresolver. Because the existing pages are assigned explicitly, it can iterate over the regular expressions in the url.py file. If there is a match, the associated function in the view (view.py) is called. The entire logic of the website is located here. As already mentioned, it is possible, among other things, to access the application's database and to process user input via a form. Afterwards, the information from the view is passed on to the template. This is a simple HTML page in which the structural layout of the frontend is defined. The information from the view can be embedded here between double curly braces and, if necessary, adjusted with simple Python-like commands. The template can now fill the callback function provided by the WSGI framework and send a response to the web server. The finished page is ready to be rendered in the client's browser window (cf. Figure 2.2). | |||||

| To resolve the URL, as mentioned above, WSGI needs a \textit {urlresolver}(cf. ). Because the existing pages are assigned explicitly, it can iterate over the regular expressions in the url.py file. If there is a match, the associated function in the view (view.py) is called. The entire logic of the website is located here. It is possible, among other things, to access the application's database and to process user input via a form. Afterwards, the information from the view is passed on to the template. This is a simple HTML page in which the structural layout of the frontend is defined. The information from the view can be embedded here between double curly braces and, if necessary, adjusted with simple Python-like commands. The template can now fill the callback function provided by the WSGI framework and send a response to the web server. The finished page is ready to be rendered in the client's browser window (cf. [Kin17], Figure 2.2). | |||||
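The idea of iterating over regular expressions until one matches can be sketched in plain Python. This is a simplified illustration and not Django's actual resolver; the patterns and the view functions `dashboard` and `message_detail` are hypothetical.

```python
import re


def dashboard(request_path):
    """Hypothetical view for the dashboard page."""
    return "dashboard"


def message_detail(request_path, message_id):
    """Hypothetical view that receives a captured URL parameter."""
    return "message {}".format(message_id)


# Ordered list of (compiled pattern, view function), similar in spirit
# to the entries of a URL configuration file.
URL_PATTERNS = [
    (re.compile(r"^dashboard/$"), dashboard),
    (re.compile(r"^messages/(?P<message_id>[0-9]+)/$"), message_detail),
]


def resolve(path):
    """Call the first view whose pattern matches the path."""
    for pattern, view in URL_PATTERNS:
        match = pattern.match(path)
        if match:
            # Named groups are passed to the view as keyword arguments.
            return view(path, **match.groupdict())
    return "404 Not Found"
```

Because the list is searched in order, a more specific pattern has to be placed before a more general one that would also match the same path.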

| \begin{figure}[!h] | \begin{figure}[!h] | ||||

| \centering | \centering | ||||

| \includegraphics[width=0.5\textwidth]{figures/request-response-cycle} | \includegraphics[width=0.5\textwidth]{figures/request-response-cycle} | ||||

| \caption{Request-response cycle of the Django framework} | |||||

| \caption{Request-response cycle of the Django framework ([Nev15])} | |||||

| \hfill | \hfill | ||||

| \end{figure} | \end{figure} | ||||

| \subsection {Special Features} | |||||

| \subsection {Special Features of Django} | |||||

| The Django framework has a number of special features that are relevant when implementing the prototype. They are described below. | The Django framework has a number of special features that are relevant when implementing the prototype. They are described below. | ||||

| The admin interface is one of the most helpful tools of the entire framework. It visualizes the metadata of the models defined in the code. Authenticated users can not only view the data quickly but also edit and manage it. The right to use the admin backend without restrictions is reserved for the so-called superuser. The first superuser can only be created via the command line. Once a superuser exists, they can grant other users the same privileges in the admin backend. In addition, there are further levels of access rights, the staff and active status, which are suitable for a broader group of users. | The admin interface is one of the most helpful tools of the entire framework. It visualizes the metadata of the models defined in the code. Authenticated users can not only view the data quickly but also edit and manage it. The right to use the admin backend without restrictions is reserved for the so-called superuser. The first superuser can only be created via the command line. Once a superuser exists, they can grant other users the same privileges in the admin backend. In addition, there are further levels of access rights, the staff and active status, which are suitable for a broader group of users. | ||||

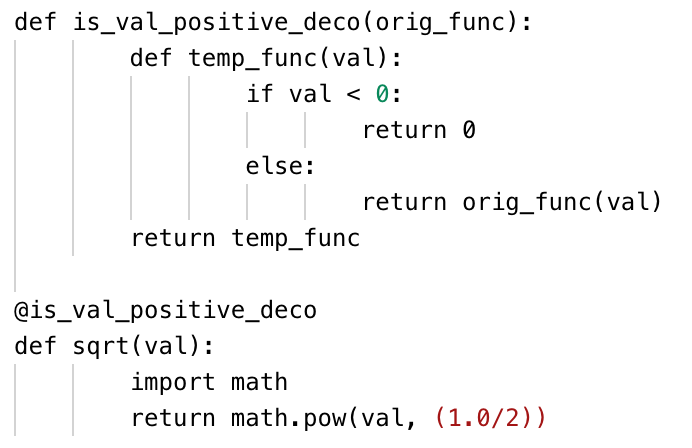

| To apply the tiered access rights on the website as well, Django provides various decorators. If a particular page may only be visited by logged-in users, the decorators of the authentication system provided by Django are imported with | |||||

| To apply the tiered access rights on the website as well, Django provides various decorators. If a particular page is to be visited only by logged-in users, the decorators of the authentication system provided by Django are imported with | |||||

| \\ | \\ | ||||

| \noindent\hspace*{10mm}% | \noindent\hspace*{10mm}% | ||||

| from django.contrib.auth.decorators import login\_required | from django.contrib.auth.decorators import login\_required | ||||

| \\ | \\ | ||||

| Directly above the beginning of the function in view.py, also called a single-view function, the following line is added: | |||||

| The following line is then added before the function definition: | |||||

| \\ | \\ | ||||